Gandalf Corvotempesta

2016-01-10 13:12:21 UTC

I'm trying to use v4 to back up a couple of test servers.

Server 1 has 150GB of data to back up.

Plain rsync copies everything in about 14-15 hours.

Bacula copies everything in 15 hours and 46 minutes (based on the last backup email).

BPC has been running for 48 hours. The whole copy took 25 hours, and it has been running "fsck #1" since yesterday.
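
To put the three timings side by side, here is a rough sketch in Python. It assumes that 150GB means 150 * 1024 MB and takes the midpoint of the rsync range; the names are purely illustrative, and the BPC figure covers the copy phase only, not the fsck.

```python
# Rough throughput comparison from the timings quoted in this post.
# Assumption: 150GB is treated as 150 * 1024 MB; the real on-disk size may differ.
DATA_MB = 150 * 1024

# Durations in hours, taken from the figures above.
durations_h = {
    "rsync": 14.5,            # midpoint of the 14-15 hour range (assumption)
    "bacula": 15 + 46 / 60,   # 15 hours 46 minutes
    "backuppc_copy": 25.0,    # copy phase only, fsck excluded
}


def throughput_mb_s(data_mb, hours):
    """Average transfer rate in MB/s for a given duration in hours."""
    return data_mb / (hours * 3600)


rates = {name: throughput_mb_s(DATA_MB, h) for name, h in durations_h.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.2f} MB/s")

# How much longer the BackupPC copy phase took compared with plain rsync.
slowdown = durations_h["backuppc_copy"] / durations_h["rsync"]
print(f"BackupPC copy phase took {slowdown:.2f}x as long as plain rsync")
```

Under those assumptions, plain rsync averages roughly 2.9 MB/s while the BPC copy phase averages about 1.7 MB/s, before counting the time spent in fsck.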

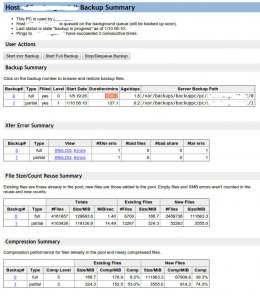

Something strange is going on: in the host summary page I can see one full backup (#0), filled=yes, level=0, which lasted 25 hours (about 1540.2 minutes), then a "partial" backup (#1), filled=yes, level=1, duration=137.

Is the partial backup a "good one"? Shouldn't it be an incremental?

Why are both "filled"? If I understood properly, only the last one should be filled.

I've uploaded two images (some info was removed for posting). In the server summary, I'm referring to the first item (the one with fsck running); the second one is a new server with its first full backup running right now.

Is everything OK and working as expected? Having backups running for more than 38 hours is not normal:

http://pastebin.com/raw/nXuUF9sA

srv1 dump has been running since 16/01/09 @ 0:26

_______________________________________________

BackupPC-users mailing list

BackupPC-***@lists.sourceforge.net

List: https://lists.sourceforge.net/lists/listinfo/backuppc-users

Wiki: http://backuppc.wiki.sourceforge.net

Project: http://backuppc.sourceforge.net/
